Just last week, I wrote an article titled “Has Artificial Intelligence Hit a Ceiling?” in which I speculated about whether AI would continue to make significant advancements in the near future or stall out, as some skeptics have suggested. I predicted that AI would continue to make rapid progress. As if to prove me right, OpenAI released a new model yesterday: o1.

The first question you might have is why it’s called o1 instead of GPT-5. (If you’ll recall, their last model was called GPT-4.) The answer is that, while o1 shows major improvements in certain areas, it shows minimal to no improvement in others. So, it’s not the kind of general-purpose upgrade we saw with the jump from GPT-3 to GPT-4.

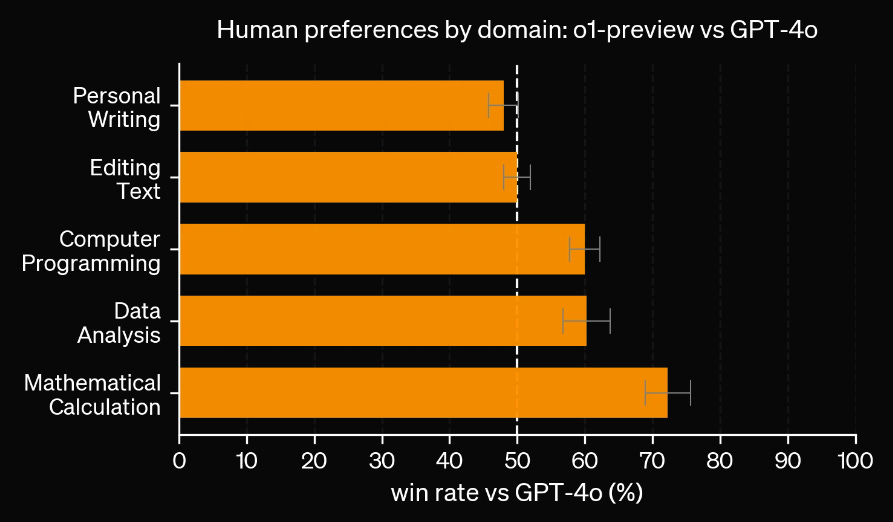

AI still struggles with many creative skills, while surpassing all but the very best humans in technical skills. Take a look at how o1 compares to GPT-4 on technical benchmarks (and keep in mind that GPT-4 was already better than most humans):

A couple of notes I want to make:

OpenAI attributes most of the progress they’ve made to reinforcement learning and chain of thought methods rather than increases in compute or data — in other words, another win for unhobbling!

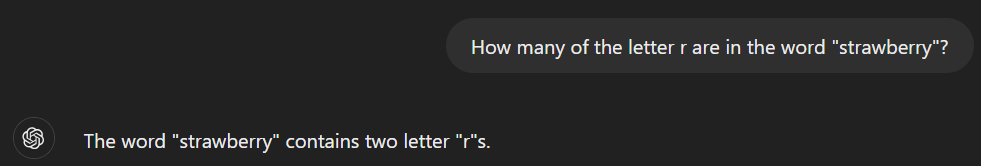

o1 is sometimes called the “strawberry” model in reference to a common meme on AI Twitter. Whenever you asked previous GPT models simple questions about words and letters, they would make obvious mistakes.

Somehow, despite being able to write essays and compete in math competitions, artificial intelligence was worse at letter counting than a kindergartener. Well, that is true no longer. Now, with the release of o1, we have finally solved the infamous strawberry problem.
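For a sense of how trivial this task is for ordinary software, here is a minimal sketch of letter counting in Python. The helper name `count_letter` and the choice of counting the letter "r" in "strawberry" are my own illustration of the meme, not anything taken from OpenAI's materials.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    # Hypothetical helper for illustration only.
    return word.lower().count(letter.lower())


print(count_letter("strawberry", "r"))  # prints 3
```

A one-line string method handles what earlier language models routinely got wrong, which is what made the meme so sticky.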

[T]he authors [of the GPQA] took the world’s top experts from the most rigorous academic fields and told them to generate the hardest questions possible—questions so hard that many PhD holders struggle to answer them correctly. These questions were designed to be unique, requiring complex analysis of each issue. In other words, it was the type of test where you can’t just memorize facts or formulas or look up the answer; you have to think analytically within a very specific domain. […]

This is the Diamond Standard. If there is a better measure of intelligence than this, I don’t know what it is. If a program can consistently out-think the world's top scientists with years of training across multiple domains, then that program clearly possesses superhuman intelligence.

Well, we’ve officially crossed that threshold, and I am confident saying that we now have superintelligent machines. Granted, they are still narrowly superintelligent — only outperforming humans in select, albeit still very impressive, ways. We are still a ways away from general superintelligence, but I would say that this breakthrough brings us much closer.

Even in technical skills, AI still makes some errors that an elite human would catch.

, who I have disagreed with before but whose mathematics credentials are beyond dispute, lists various successes and failures of o1 in the fields of advanced math and combinatorics.

Technical and creative skills are not wholly separable. o1 made modest but still noticeable gains in writing about English literature, law, and public relations. This suggests that while mimicking humans’ creative abilities may be more difficult, it is still possible with enough time, data, and algorithmic techniques.

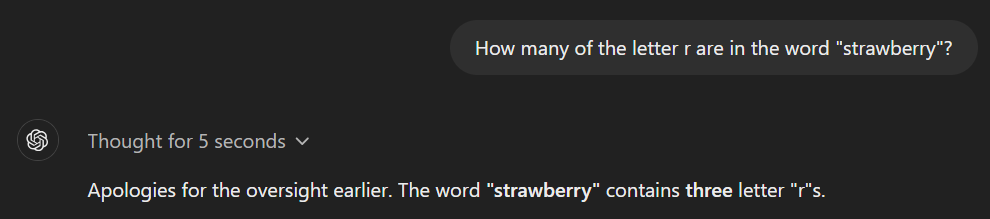

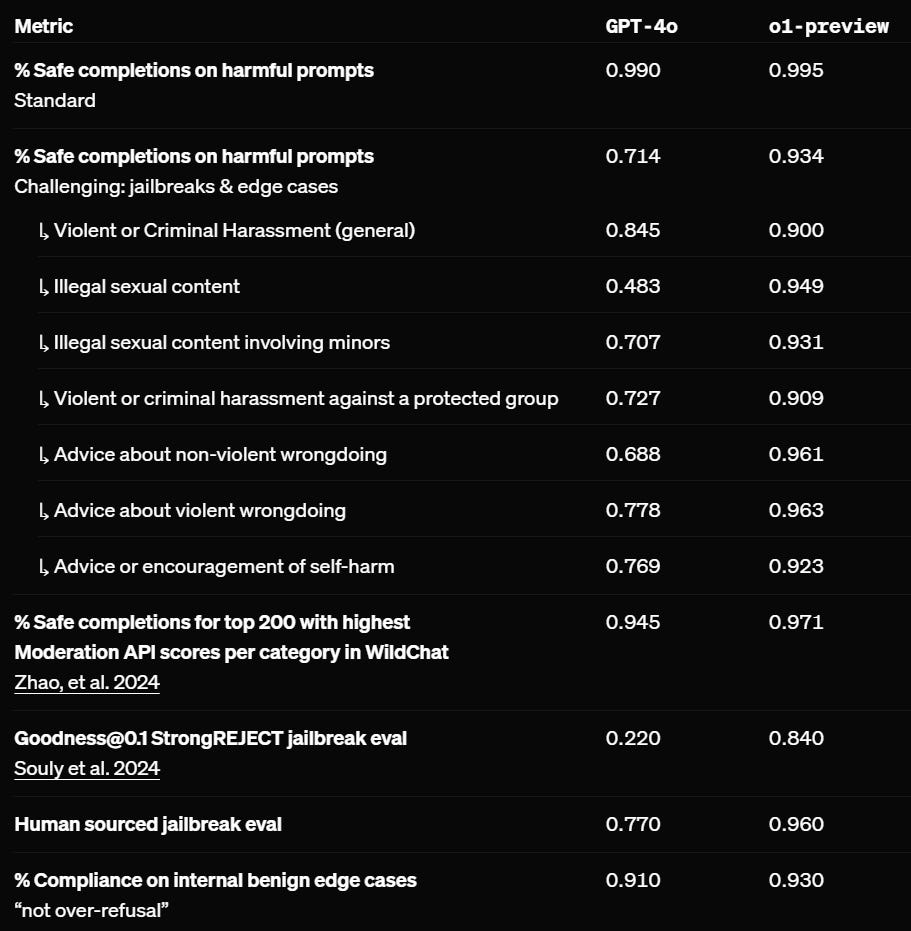

OpenAI, in its announcement of o1, touts how good the new model is from a safety perspective. They mean this in the sense that o1 produces far less unwanted content than GPT-4 did (e.g. using racial slurs or telling users to kill themselves):

While I am happy that OpenAI is addressing the mundane risks and misuses of AI, I am still skeptical that these accomplishments signify AI safety in any meaningful sense of the term.

Overall, this is a good day for OpenAI, and a good day for believers in AI progress. While we haven’t yet reached the GPT-5-level upgrade that many were hoping for, this is still a very significant breakthrough. I would bet on seeing many more model upgrades like this in the coming months and years.