Pareto Improvements in AI Policy

Some win-win strategies to promote a better AI future

To quote Wikipedia:

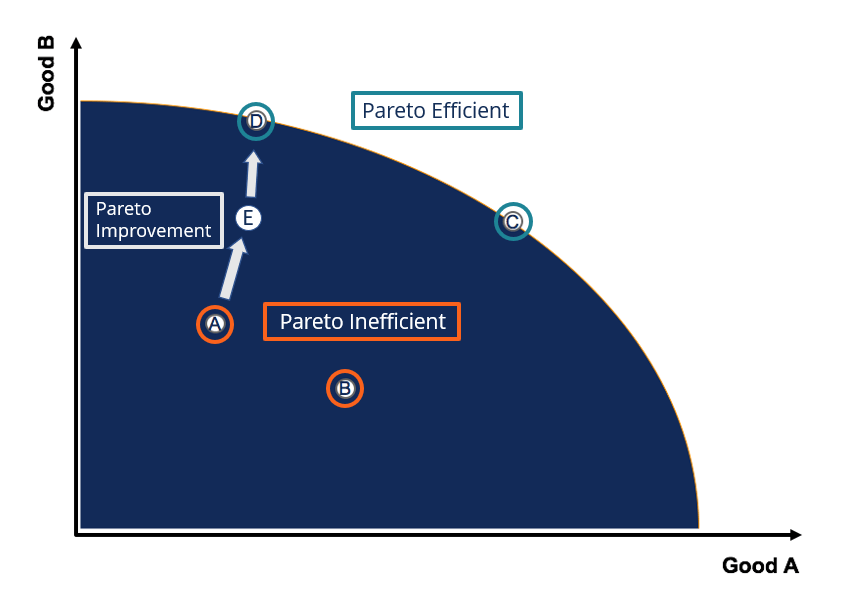

In welfare economics, a Pareto improvement formalizes the idea of an outcome being "better in every possible way". A change is called a Pareto improvement if it leaves at least one person in society better-off without leaving anyone else worse off than they were before. A situation is called Pareto efficient or Pareto optimal if all possible Pareto improvements have already been made; in other words, there are no longer any ways left to make one person better-off, without making some other person worse-off.
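The definition can be made concrete with a small sketch. The function name `is_pareto_improvement`, the three people, and their welfare scores are hypothetical, chosen only to illustrate the two conditions in the quote: nobody ends up worse off, and at least one person ends up better off.

```python
from typing import Mapping


def is_pareto_improvement(before: Mapping[str, float], after: Mapping[str, float]) -> bool:
    """Return True if `after` leaves nobody worse off and at least one person better off."""
    if before.keys() != after.keys():
        raise ValueError("both outcomes must cover the same people")
    nobody_worse = all(after[person] >= before[person] for person in before)
    someone_better = any(after[person] > before[person] for person in before)
    return nobody_worse and someone_better


# Hypothetical welfare scores; higher is better.
status_quo = {"alice": 5.0, "bob": 3.0, "carol": 4.0}
win_win = {"alice": 5.0, "bob": 4.0, "carol": 4.0}    # Bob gains, nobody loses
trade_off = {"alice": 6.0, "bob": 2.0, "carol": 4.0}  # Alice gains at Bob's expense

print(is_pareto_improvement(status_quo, win_win))    # True
print(is_pareto_improvement(status_quo, trade_off))  # False
```

Note that an unchanged outcome does not count as an improvement, since nobody is strictly better off.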

The current AI policy landscape is not Pareto optimal.

As I see it, there are currently three main interest groups with regard to AI policy:[1]

The AI Accelerationists, who believe that we ought to develop and implement AI as quickly as possible to harness its many benefits. Most leading AI companies are accelerationists, whether or not they explicitly identify as such.

The AI Safetyists, who believe that we ought to place guardrails on the development of AI in order to protect against various categories of AI risk. Chief among these is the risk of AI misalignment, which has the potential to destroy all of humanity.

The National Security Hawks, who believe that we (“we” meaning the United States, or the West more broadly) must stay ahead of China in the AI race. They hold that whichever country is first to develop and implement AI effectively can gain a lasting upper hand on the world stage, militarily and economically.

(Full disclosure: I lean toward the AI safety camp, as I am very concerned about AI existential risks. Still, I am sympathetic to both the accelerationist and national security perspectives.)

Much can be said about the tensions and disagreements between these groups. AI accelerationists wish to develop AI more quickly, whereas AI safetyists generally wish to slow down AI development to focus on alignment research. AI accelerationists tend to favor a decentralized approach to AI development with a heavy emphasis on open-source models, whereas national security hawks are more inclined to centralize AI development in order to protect American technologies from foreign infiltration and theft. The list goes on.

Yet, the point I want to emphasize in this post is that AI optimists and AI pessimists do not necessarily have to be in conflict. There are still many Pareto improvements to AI policy — policies that can benefit at least one of these groups’ objectives, while leaving none of them worse off. I view these as the low-hanging fruit of AI policy, some things that everybody should be able to agree on.

1. Increasing Public- and Private-Sector Support for AI Interpretability Research
2. Developing and Implementing More Secure Trackers for Advanced Microchips
3. Developing and Implementing Safeguards Against Malicious Uses of AI
4. Increasing Cybersecurity at Leading AI Labs
5. Increasing America’s Human Capital Through Immigration Reform and Education Incentives
6. Funding Public Compute Resources Like NAIRR
7. Developing More Meaningful and Reliable AI Benchmarks
8. Streamlining Permitting for New Energy Infrastructure

Increasing Public- and Private-Sector Support for AI Interpretability Research

AI interpretability, including the subfield known as mechanistic interpretability, is the study and development of methods that help humans understand how and why AI systems behave the way they do. As models become increasingly powerful and complex, their internal reasoning grows harder to follow, even for their creators. This opacity makes it difficult to catch failures before they happen, or to investigate them after the fact. Interpretability is therefore not a luxury or an academic curiosity; it is a prerequisite for deploying AI safely in high-stakes domains. Just as aviation regulators wouldn’t certify a new aircraft without understanding how it works, we should be wary of trusting AI systems that we cannot explain.

So how can we promote AI interpretability? I recommend reading Dario Amodei’s recent piece on the subject, since he describes both the challenges and solutions far more eloquently than I can. But two approaches he suggests are:

First, AI researchers in companies, academia, or nonprofits can accelerate interpretability by directly working on it. Interpretability gets less attention than the constant deluge of model releases, but it is arguably more important. […] Anthropic is doubling down on interpretability, and we have a goal of getting to “interpretability can reliably detect most model problems” by 2027. We are also investing in interpretability startups. But the chances of succeeding at this are greater if it is an effort that spans the whole scientific community. […] Interpretability is also a natural fit for academic and independent researchers: it has the flavor of basic science, and many parts of it can be studied without needing huge computational resources. To be clear, some independent researchers and academics do work on interpretability, but we need many more. […]

Second, governments can use light-touch rules to encourage the development of interpretability research and its application to addressing problems with frontier AI models. Given how nascent and undeveloped the practice of “AI MRI” is, it should be clear why it doesn’t make sense to regulate or mandate that companies conduct them, at least at this stage: it’s not even clear what a prospective law should ask companies to do. But a requirement for companies to transparently disclose their safety and security practices (their Responsible Scaling Policy, or RSP, and its execution), including how they’re using interpretability to test models before release, would allow companies to learn from each other while also making clear who is behaving more responsibly, fostering a “race to the top”. We’ve suggested safety/security/RSP transparency as a possible direction for California law in our response to the California frontier model task force (which itself mentions some of the same ideas). This concept could also be exported federally, or to other countries.

Improved interpretability is good for safety for obvious reasons — being able to interpret AI outputs can help us verify that AI systems are aligned, or alternatively, to identify alignment failures if and when they occur. It's good for national security since military leaders will be more able to use AI systems if they can trust that those systems are acting in America's best interest. It's also good for AI companies, since consumers will be more willing to use and pay for models that they can understand and trust. In some sectors like mortgage lending, companies are actually forbidden by law from using algorithms unless they can explain how those algorithms work. Thus, greater interpretability can enable more widespread and responsible deployment of AI systems across the economy, fostering innovation while maintaining accountability and trust.

Developing and Implementing More Secure Trackers for Advanced Microchips

Right now, after a microchip is sold, the producer of that microchip has no idea where the chip will ultimately wind up. Maybe the chip will enter the hands of a reliable and legitimate business. Or maybe the chip will end up in the hands of a Russian general, or an organized crime boss, or a Chinese researcher in Guangdong. This is not just a hypothetical musing. Despite the US government's best efforts to prevent it, an estimated tens of thousands of advanced chips are smuggled into China every year.

This problem can, at least hypothetically, be solved. In particular, my friend Tao Burga has been hard at work on the question of how to design more efficient microchip tracking and export controls. One part of doing this involves developing hardware-enabled mechanisms (HEMs) that can be attached to microchips so that the manufacturers can monitor where the chips are geographically and, if necessary, disable chips that have fallen into the wrong hands. Another part of doing this involves more rigorously enforcing pre-existing export bans. The current administration has neglected to stop the sale of millions of advanced microchips to China, which poses an existential risk to national security. That negligence can change, however.

If you care at all about America winning the AI arms race, this should be your top priority: Immediately and thoroughly root out chip smuggling. America’s dominance of global compute is perhaps the one durable lead we have over China in AI development, but that lead gets smaller every day that American chips are trafficked into mainland China. I dare say that this is the single most important and most cost-effective action that American leaders can take to promote national security.

This is beneficial for AI safety, since greater controls on chip diffusion can prevent bad actors from harnessing advanced AI capabilities. Export controls could even be good for acceleration, since a greater ability to identify malicious users will make it easier to do business with legitimate ones. By ensuring that advanced chips are only in the hands of trusted entities, we can potentially speed up collaborative AI research efforts among allies and vetted organizations.

Developing and Implementing Safeguards Against Malicious Uses of AI

The rapid advancement of AI technology has brought with it a host of new attack vectors. Deepfakes, sophisticated AI-generated audio and video content that can convincingly impersonate real people, have emerged as a significant threat to personal and institutional security. Similarly, AI-powered identity theft and cyber fraud techniques have become increasingly sophisticated, making it harder for individuals and organizations to protect themselves against financial and reputational damage.

We are in an arms race between criminals using AI to commit malicious actions and institutions using AI to identify and prevent these actions. We must ensure that the trusted institutions win. This involves not only developing more advanced AI detection and prevention tools but also implementing robust policies and regulations to guide their use. For instance, technical standards like C2PA can be used across the internet to verify the provenance and authenticity of images, videos, and other content.

This would be good for AI safety because it protects common people from AI risks in their daily lives, shielding them from financial losses, reputational damage, and potential psychological harm caused by AI-enabled crimes. Moreover, the same safeguards that can protect against malicious humans using AI can also protect against potential future threats from autonomous AI agents. It’s good for national security because, by preventing AI-enabled threats, we can safeguard our people, critical infrastructure, and economic resources from both domestic and foreign adversaries who might leverage AI for espionage, sabotage, or other harmful activities. And of course, AI developers themselves benefit from a world with less fraud and uncertainty.

Increasing Cybersecurity at Leading AI Labs

Right now, cybersecurity is embarrassingly poor at America’s leading AI labs. It is almost trivially easy for foreign governments like China’s, or even ordinary criminal groups, to gain access to cutting-edge trade secrets. It is widely suspected that Chinese theft of American IP was at least partially responsible for DeepSeek-R1’s success, which many American technologists assumed was impossible for the Chinese to achieve on their own.

Strengthening cybersecurity, and ensuring that only designated officials have access to the internal workings of AI systems, will go a long way to promoting trust and confidence in AI developers. This would involve massively investing in operational security, including advanced encryption methods, multi-factor authentication, and rigorous access controls. It may also necessitate a shift in the relationship between the government and companies in Silicon Valley, potentially involving closer collaboration on cybersecurity measures or even regulatory frameworks to ensure adequate protection of sensitive AI technologies.

Enhancing cybersecurity is crucial for AI safety because it prevents bad actors from accessing advanced AI systems and potentially misusing them. This will become especially critical once AI models potentially gain significant capabilities in areas related to chemical, biological, radiological, and nuclear (CBRN) technologies. Improved security measures are also beneficial for AI companies, since intellectual property theft disincentivizes product development and can lead to significant financial losses. Moreover, it's vital for national security, as it could prevent China and other foreign adversaries from accessing our confidential technologies, thereby maintaining the United States' competitive edge in AI development.

Increasing America’s Human Capital Through Immigration Reform and Education Incentives

We're bottlenecked by a lack of human capital in several key areas, particularly in advanced technology and scientific fields.

We can improve this through immigration reform: Make it easier for foreign students, especially those with STEM majors, to gain residency and work permits in the United States. Streamline the visa application process, extend post-study work permits, and create clearer pathways to permanent residency. Expand the H-1B program, the O-1 visa program, and other opportunities for individuals with extraordinary abilities. We could even implement a points-based immigration system that prioritizes skills in high-demand fields like machine learning.

We can also improve it through education incentives: It's clear that America's STEM education system is flawed at all levels, from K-12 all the way to graduate programs. We ought to encourage more Americans to enter in-demand STEM fields like advanced mathematics, physics, and materials science. This could be achieved through increased funding for STEM programs in schools, scholarships for students pursuing these fields, and partnerships between educational institutions and industry to make academic courses more relevant to real-world experience and job opportunities.

More funding for the NSF and other scientific grant-making agencies is crucial. If a smart and talented STEM researcher wants to study a particular topic, they should have the resources to do so. This includes not only increasing the overall budget for these agencies but also streamlining the grant application process and ensuring that relevant research areas receive support.

These measures would be better for AI companies because they would have more talented people to develop and deploy AI products. They would be better for AI safety because they would produce a larger pool of talented people who could perform technical safety research. And they would be better for national security because America would have more talented people to develop our cybersecurity and critical technologies. And of course, this influx of talent could lead to breakthroughs in other crucial areas such as clean energy, biotechnology, and space exploration, further accelerating scientific progress and strengthening America's position as a global leader in innovation.

(By the way, this is one of many reasons I’m disappointed with the current Trump Administration. Trump has scared away foreign academics through arbitrary arrests and detentions, and cut critical funding to scientific research agencies like the NIH and NSF. These actions are reducing America’s human capital at a time when we need it more than ever, for practically no conceivable benefit. Trump is engineering a Pareto impairment to US policy.)

Funding Public Compute Resources Like NAIRR

Similar to the point above, talented academics who need access to computing power for their research should have it. Unfortunately, many researchers are currently constrained by a lack of funding, given the immense costs of computation. But if the government were to step in and provide compute to those in need, these researchers could become far more productive.

The National AI Research Resource (NAIRR) is an initiative in the United States, currently operating as a pilot program led by the National Science Foundation, aimed at democratizing access to the resources essential for AI research and development. A full-scale NAIRR would provide researchers and students across the country with access to computing power, large-scale datasets, educational tools, and user support. To move NAIRR beyond the pilot stage, policymakers should increase its funding and create partnerships with private sector companies to leverage their resources.

This would be most obviously beneficial for AI safetyists, since it would enable researchers to focus on safety research even when that research is not incentivized by the market. But it would also be beneficial to AI companies themselves, since the companies would be able to take advantage of basic research performed with these resources. This could lead to a virtuous cycle where public research informs private development, and private sector innovations feed back into academic research.

Developing More Meaningful and Reliable AI Benchmarks

We cannot build a better system unless we have some way to measure what a better system looks like. We cannot measure what a better system looks like without concrete benchmarks. In particular, we need benchmarks that A) meaningfully measure intelligence and capabilities and B) cannot be gamed by mere imitation and reward-hacking.

For at least a year, it has been difficult to understand or talk about AI progress, since so many of the benchmarks that we once used to measure AI progress have become saturated. This saturation occurs when AI systems achieve near-perfect scores on tasks that were once considered challenging. As a result, these benchmarks no longer provide meaningful distinctions between different AI systems or accurately reflect the state of AI capabilities.
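To make saturation concrete, here is a minimal sketch. Everything in it is hypothetical: the model names, the scores, the helper names, and the 0.95 ceiling are illustrative assumptions, not figures from any real benchmark. It shows how a saturated benchmark compresses every model into a narrow band near the top, while a fresh benchmark still separates them.

```python
def is_saturated(scores: dict[str, float], ceiling: float = 0.95) -> bool:
    """Treat a benchmark as saturated when every model scores at or above `ceiling`.

    Scores are fractions in [0, 1]. The 0.95 default is an arbitrary
    illustrative threshold, not an established standard.
    """
    if not scores:
        raise ValueError("need at least one score")
    return min(scores.values()) >= ceiling


def score_spread(scores: dict[str, float]) -> float:
    """Gap between the best and worst model; a small gap means little signal."""
    return max(scores.values()) - min(scores.values())


# Hypothetical results for three models on an old and a new benchmark.
old_benchmark = {"model_a": 0.97, "model_b": 0.98, "model_c": 0.99}
new_benchmark = {"model_a": 0.22, "model_b": 0.41, "model_c": 0.68}

print(is_saturated(old_benchmark), round(score_spread(old_benchmark), 2))  # True 0.02
print(is_saturated(new_benchmark), round(score_spread(new_benchmark), 2))  # False 0.46
```

On the old benchmark, a two-point gap could easily be noise, so the ranking tells us little about which system is actually more capable.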

Organizations like METR and the ARC Prize Foundation are devoted to creating new benchmarks, but more work needs to be done. Many existing evaluations still focus on narrow tasks with clear right answers, which leaves us blind to the broader, more nuanced aspects of intelligence — such as reasoning under uncertainty, forming long-term plans, or resisting deceptive behavior. We need benchmarks that stress-test models in these areas, ideally in ways that cannot be solved through superficial pattern-matching. Additionally, these benchmarks should be robust to distributional shift and adversarial optimization — otherwise, we risk building models that look good on paper but fail in deployment.

The government can help in several ways. First, agencies like NIST can expand their role in AI evaluation by convening expert panels to define benchmark desiderata, curate challenge datasets, and audit the results of submitted models. Second, competitive grant programs can fund academic and nonprofit researchers to develop benchmarks specifically targeted at failure modes relevant to national security, critical infrastructure, and high-stakes decision-making. Third, the government can require that benchmark results be reported as part of pre-deployment safety disclosures — for instance, by including them in Responsible Scaling Policies. This would create both an incentive to build better benchmarks and a public accountability mechanism to ensure they’re being used.

This would be good for AI companies since more accurate benchmarks can enable developers to see where they need to improve. It would be good for AI safety since more accurate benchmarks can enable people to identify blind spots and alignment failures. And it would be good for national security since more accurate benchmarks can enable military leaders to identify gaps in our capabilities and thus more efficiently allocate resources.

Streamlining Permitting for New Energy Infrastructure

This is an issue that predates the AI boom, but which AI has made all the more relevant in recent years. AI data centers require tremendous amounts of energy that our energy grid is currently unequipped to handle. For context, a single large-scale AI training run can consume more electricity than 100 U.S. households use in an entire year. In the worst cases, this surging demand could lead to skyrocketing energy costs and could exacerbate climate change by reviving unclean energy sources like coal. We can do better.
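As a rough back-of-the-envelope check on the household comparison, the sketch below divides a training run's energy use by one household's annual consumption. Both inputs are assumptions chosen for illustration and do not come from this post: roughly 1,300 MWh is in line with a commonly cited published estimate for training GPT-3, and about 10,500 kWh per year is in the range usually reported for an average U.S. home. Newer frontier training runs are likely much larger, so treat the result as a floor rather than a forecast.

```python
# Assumed inputs (illustrative, not from this post; check current sources before reuse).
TRAINING_RUN_KWH = 1_300_000      # ~1,300 MWh for one large training run
HOUSEHOLD_KWH_PER_YEAR = 10_500   # approximate annual use of one U.S. household


def household_years(training_kwh: float, household_kwh_per_year: float) -> float:
    """How many households' annual electricity use one training run equals."""
    if household_kwh_per_year <= 0:
        raise ValueError("household consumption must be positive")
    return training_kwh / household_kwh_per_year


equivalent = household_years(TRAINING_RUN_KWH, HOUSEHOLD_KWH_PER_YEAR)
print(f"One training run is roughly {equivalent:.0f} household-years of electricity")
```

Under these assumed inputs, the result comes out somewhat above 100 households, consistent with the comparison above.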

For too long, America has been held back by an overly strict permitting system that makes it harder to build up energy infrastructure. Take, for example, the Inflation Reduction Act (IRA) passed in 2022. Despite allocating nearly $370 billion for clean energy and climate initiatives, the actual construction of new energy projects has been sluggish. The Department of Energy reported that as of early 2023, only a fraction of the funded projects had broken ground due to permitting delays and regulatory hurdles. This bottleneck is not just frustrating; it's actively hindering our ability to meet growing energy demands and transition to cleaner sources.

With permitting reform, especially for clean energy sources like solar and nuclear power, we can address climate change, make America more energy dominant, and bring energy prices down for average consumers. Streamlining the approval process for new transmission lines, for instance, could accelerate the integration of renewable energy into the grid. Similarly, removing burdens around advanced nuclear reactors could pave the way for safer, more efficient power generation.

In other words, we need an Abundance Agenda for AI energy use!

Conclusion

There are certainly many places where AI policy advocates disagree with each other, but at the core, we all want many of the same things. We all want a world where people’s lives become easier and more prosperous due to AI. We all want a world where people are protected from dangerous AI capabilities. And we all want a world where technology makes us safer from, rather than more vulnerable to, foreign enemies and adversaries.

What I have outlined above are common-sense policies that will make all parties better off than they are today. I urge leaders in both the public and private sector to see that there is still substantial room for mutually beneficial improvement, and to act accordingly.

[1] When I say “policy”, I mean any action that leaders use to guide the development of AI. That includes not only laws and government regulations, but also corporate policies, academic research agendas, etc.