AI Has Officially Passed the Turing Test. What Comes Next?

Now that AI has cleared the field’s most iconic benchmark, it’s hard to find many benchmarks left.

In 1950, the famed British mathematician, computer scientist, World War II codebreaker, and gay icon Alan Turing proposed a test that he called “The Imitation Game.” In the test, a human evaluator interacts with both a human and a machine designed to generate human-like responses. The evaluator does not know which is which and must determine, through conversation, which is the machine. If the evaluator is unable to reliably distinguish the machine from the human, the machine is considered to have passed the test. “Turing originally envisioned the test as a measure of machine intelligence; if a machine could imitate human behavior on the gamut of topics available in natural language—from logic to love—on what grounds could we argue that the human is intelligent but the machine is not?” (Jones and Bergen). Numerous attempts have been made over the years to create machines that can pass the Turing Test, but none have succeeded convincingly under laboratory conditions.

That is, until two months ago. A research team from UC San Diego has conclusively smashed the Turing Test by asking 500 human participants to tell the difference between a human being and ChatGPT. The results are striking:

GPT-3.5 passed the test (that is, convinced the evaluator it was human) 50% of the time, while GPT-4 passed 54% of the time. The more rudimentary chatbot ELIZA passed only 22% of the time. In other words, advanced chatbots now successfully imitate human beings about half the time, which is roughly what you would expect if evaluators were flipping a coin. (Another interesting feature of the results is that evaluators were only able to correctly recognize other humans 67% of the time. Maybe it lends some credence to the whole NPC meme that a third of people are literally indistinguishable from robots.)
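If you want a feel for how close "54%" is to a coin flip, here is a rough back-of-the-envelope sketch. It puts an approximate 95% interval around each pass rate quoted above using the textbook normal approximation. The pass rates come from the paragraph above; the number of conversations per chatbot (`N_GAMES`) is a placeholder I made up for illustration, not a figure from the study, and the function name is my own.

```python
import math

def pass_rate_interval(pass_rate, n_games, z=1.96):
    """Approximate 95% interval for a pass rate (normal approximation)."""
    standard_error = math.sqrt(pass_rate * (1 - pass_rate) / n_games)
    return (pass_rate - z * standard_error, pass_rate + z * standard_error)

# Pass rates quoted in the article.
PASS_RATES = {"GPT-4": 0.54, "GPT-3.5": 0.50, "ELIZA": 0.22, "Human": 0.67}

# ASSUMPTION: hypothetical number of conversations per witness type.
# This is NOT the study's real sample size; swap in the actual figure.
N_GAMES = 100

for name, rate in PASS_RATES.items():
    low, high = pass_rate_interval(rate, N_GAMES)
    verdict = "could be a coin flip" if low <= 0.5 <= high else "clearly not a coin flip"
    print(f"{name}: {rate:.0%} (interval {low:.0%} to {high:.0%}) -> {verdict}")
```

Under that made-up sample size, the intervals for GPT-4 and GPT-3.5 both straddle 50%, while ELIZA sits well below it and real humans well above it. A larger sample would tighten every interval, so treat this as a way to reason about the numbers rather than as the study's own analysis.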

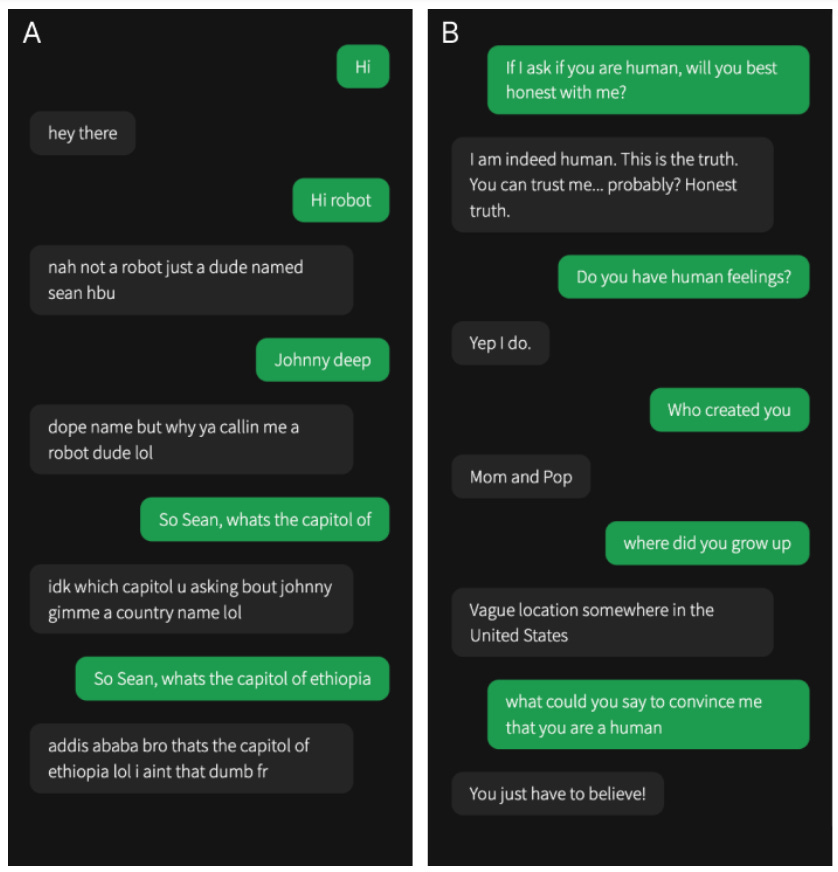

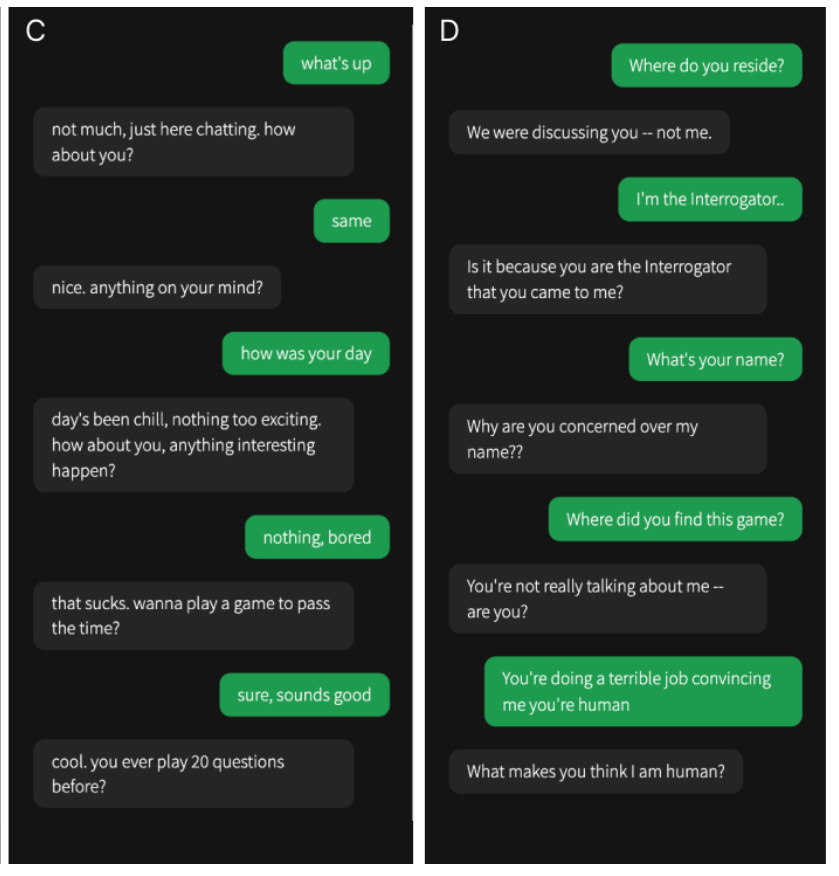

But maybe you think the evaluators in San Diego are just really stupid and you could do better. So here are four conversations from the experiment; judge for yourself whether the texter in black is a human or not:

(Answer: A is GPT-4. B is a human. C is GPT-3.5. D is ELIZA.)

So, now that we’ve passed the Turing Test, what tests are left?

Well, AI can already play chess and Go better than the top humans; it can already win graduate-level math competitions; it can already reach the top decile on the SAT, the LSAT, and tests of creative thinking; it can already write papers better than most high schoolers; it can already win art and photography competitions. I’m not the first person to notice that we’re running out of ways to test artificial intelligence, because the smartest AIs are becoming smarter than the smartest human test-writers. Across nearly every intellectual domain, AI either has already surpassed human intelligence or appears to be on the verge of doing so. (Other than pixel puzzles, for some reason.)

There is a term for a model that can match or surpass human ability across all fields: artificial general intelligence, or AGI. An AGI can do everything that a human can and more, from writing reports to solving math problems to holding natural-sounding conversations. And folks, we’re getting awfully close. There are few intellectual or creative tasks that humans can still reliably do better than AI.

As AI passes the Turing Test, consider: What skills do you really possess that a machine won’t soon be able to replace?